AI Compliance: Ensuring Ethical AI Practices

Did you know that over 90% of organizations using Artificial Intelligence (AI) are concerned about compliance with ethical AI guidelines and regulations?

In today’s rapidly evolving digital landscape, the use of AI has become widespread across various industries. From healthcare and finance to manufacturing and retail, organizations are leveraging AI technologies to drive innovation, improve efficiency, and gain a competitive edge. However, with this expansion comes the need for robust AI compliance measures to ensure ethical practices.

Regulatory compliance, governance standards, legal requirements, and ethical guidelines are critical considerations for any organization adopting AI. Addressing them is essential not only for ethical reasons but also to meet legal and regulatory obligations.

This article explores the intersection of AI and compliance, delving into the challenges and opportunities organizations face in ensuring compliance with regulatory standards. It highlights best practices for integrating AI ethically and outlines strategies for implementing effective AI compliance frameworks.

Key Takeaways:

- Ensuring ethical AI practices is a top concern for more than 90% of organizations using AI.

- Compliance in AI is crucial to meet both ethical and legal obligations.

- AI compliance requires organizations to navigate regulatory frameworks and governance standards.

- Building a culture of compliance and establishing robust data governance frameworks are essential for AI implementation.

- Managing risks and staying agile in a rapidly evolving compliance landscape are critical for organizations.

Integrating AI into Diverse Industries

The integration of AI technologies has brought unprecedented efficiency and innovation to many industries. From healthcare to finance, AI applications have transformed the way organizations operate, process data, and make decisions. However, operating in AI-driven environments brings its own set of challenges and responsibilities.

To ensure compliance with regulatory standards, organizations must navigate the ethical and legal considerations that arise from AI implementation. This involves striking a delicate balance between reaping the benefits of AI technologies and mitigating potential risks.

AI technologies are revolutionizing industries by automating repetitive tasks, streamlining workflows, and providing invaluable insights from vast amounts of data. By adopting AI-driven environments, organizations can enhance decision-making processes, optimize resource allocation, and develop innovative products and services.

However, with the power of AI technologies comes the responsibility to ensure compliance with regulatory standards and ethical practices. Organizations must address concerns related to data privacy, algorithmic biases, and potential social impact. By prioritizing compliance, organizations can foster trust among stakeholders and mitigate the risks associated with AI implementation.

“The integration of AI technologies into diverse industries presents immense opportunities for growth and innovation. However, organizations must tread carefully and ensure that they align their AI applications with ethical and legal requirements.”

AI-driven environments require organizations to consider the legal implications of their AI applications. Complying with regulations helps organizations avoid legal penalties, reputational damage, and loss of customer trust. By integrating AI technologies responsibly, organizations can establish themselves as leaders in their respective industries.

The Importance of Ethical AI Applications

When integrating AI into industries, it is crucial to prioritize ethical considerations. Organizations must be mindful of the potential biases embedded in AI algorithms and work towards ensuring fairness and transparency in decision-making processes.

Ethical AI applications promote trust and encourage responsible use of AI technologies. By developing frameworks that address biases, organizations can minimize unethical consequences and ensure that AI-driven environments benefit all stakeholders.

The Challenges and Opportunities in AI-driven Environments

While there are significant benefits to integrating AI technologies, organizations also face challenges in AI-driven environments. These challenges include:

- Regulatory compliance: Organizations must navigate complex regulatory frameworks to ensure their AI applications meet legal requirements and standards.

- Data privacy: Handling and protecting sensitive data in AI-driven environments requires robust data governance frameworks to maintain confidentiality and privacy.

- Algorithmic biases: AI algorithms are only as unbiased as the data they are trained on. Organizations must actively address potential biases to ensure fairness and prevent discrimination.

Despite these challenges, AI-driven environments present immense opportunities for organizations to innovate and thrive. By adhering to ethical practices and being proactive in compliance, organizations can unlock the full potential of AI technologies.

Addressing Ethical Considerations in AI

Ensuring ethical AI practices involves addressing biases, ensuring fairness in AI algorithms, and promoting transparency and explainability. Meeting these ethical challenges is pivotal to maintaining public trust and realizing the benefits of artificial intelligence.

Addressing Biases

One of the key ethical considerations in AI development is addressing biases. AI systems are only as fair and unbiased as their underlying algorithms and training data. By actively identifying and mitigating biases, organizations can ensure that their AI systems do not perpetuate discriminatory or exclusionary practices.

Ensuring Fairness

Fairness in AI algorithms is essential to uphold equal treatment and prevent discrimination. Organizations need rigorous evaluation methods to measure the fairness of their AI systems, for example by comparing approval or error rates across demographic groups. Bias correction techniques can then improve fairness directly, while model interpretability and algorithmic transparency make unfair outcomes easier to detect and explain.
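As one illustration of measuring fairness, the sketch below compares how often each group receives a favorable outcome. It computes per-group selection rates and the ratio of the lowest rate to the highest, then checks that ratio against 0.8, the "four-fifths" rule of thumb from US employment-selection guidance. The (group, approved) record format, the sample data, and the choice of this single metric are assumptions for illustration; a real audit would choose metrics suited to its legal context and use case.

```python
from collections import defaultdict

THRESHOLD = 0.8  # "four-fifths" rule of thumb; an assumed review trigger

def selection_rates(decisions):
    """Return the share of favorable outcomes per group.

    `decisions` is an iterable of (group, approved) pairs, where
    `approved` is a bool. This record format is an assumption.
    """
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        if approved:
            approvals[group] += 1
    return {group: approvals[group] / totals[group] for group in totals}

def disparate_impact_ratio(rates):
    """Ratio of the lowest group selection rate to the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved, so selection rates do not differ
    return min(rates.values()) / highest

# Hypothetical audit sample: (group, approved)
sample = [("A", True), ("A", True), ("A", False), ("A", True),
          ("B", True), ("B", False), ("B", False), ("B", False)]

rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)
print(rates)            # {'A': 0.75, 'B': 0.25}
print(round(ratio, 2))  # 0.33
print("flag for review" if ratio < THRESHOLD else "within threshold")
```

Equal selection rates are only one definition of fairness; comparing error rates across groups (for example, false-negative rates) can lead to different conclusions on the same data.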

Promoting Transparency

Transparency plays a crucial role in building trust and accountability in AI systems. It involves providing clear information about how AI algorithms make decisions and what data is being used. By disclosing the methodologies and data sources, organizations promote transparency, allowing users to understand and challenge the outcomes of AI systems.

“Transparency is a fundamental element of ethical AI. It enables users to have a better understanding of how AI algorithms work, leading to increased accountability and trust.” – Jane Smith, AI Ethics Expert
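For simple models, a clear explanation of a decision can be read directly off the model. The sketch below assumes a hypothetical linear credit-scoring model and breaks one applicant's score into per-feature contributions (weight × value), listing the largest influences first. The feature names, weights, and scaling are invented for illustration. This decomposition is exact only for linear (additive) models; more complex models generally need dedicated explainability techniques.

```python
# Hypothetical linear scoring model: score = BIAS + sum(weight * feature value).
WEIGHTS = {"income": 0.4, "debt_ratio": -0.9, "years_employed": 0.2}
BIAS = 0.1

def explain(applicant):
    """Return the score and per-feature contributions, largest effect first."""
    contributions = {name: weight * applicant[name] for name, weight in WEIGHTS.items()}
    score = BIAS + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return score, ranked

# Feature values are assumed to be pre-scaled to comparable ranges.
applicant = {"income": 1.5, "debt_ratio": 0.8, "years_employed": 2.0}
score, ranked = explain(applicant)
print(f"score = {score:.2f}")
for name, contribution in ranked:
    if contribution == 0:
        continue  # feature had no influence on this decision
    direction = "raised" if contribution > 0 else "lowered"
    print(f"{name} {direction} the score by {abs(contribution):.2f}")
```

An explanation in this form ("debt_ratio lowered the score by 0.72") is something a user can understand and, if the underlying data is wrong, challenge.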

Ethical Challenges and Solutions in AI Compliance

AI compliance poses several ethical challenges that require thoughtful solutions. Organizations must navigate the tension between compliance requirements and ethical considerations to ensure that their AI systems operate within legal boundaries while upholding moral values. This delicate balance necessitates the development of comprehensive frameworks and ethical guidelines.

Case studies serve as valuable learning tools, highlighting the ethical challenges faced by organizations and the strategies they implemented to ensure AI compliance. By studying these real-world examples, businesses can gain insights into best practices and apply them to their own AI initiatives.

| Ethical Challenge | Solution |
|---|---|
| Algorithmic Bias | Regularly auditing and testing algorithms, diversifying data sources, and involving diverse teams in AI development. |
| Lack of Transparency | Implementing explainable AI techniques, documenting decision-making processes, and providing clear explanations to users. |
| Privacy Concerns | Adhering to privacy regulations, obtaining informed consent for data usage, and implementing robust data protection measures. |
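Documenting decision-making processes is easier to audit when each automated decision is recorded in a form that cannot be silently edited. The sketch below shows one illustrative approach, not a prescribed standard: an append-only log in which each entry stores the SHA-256 hash of the previous entry, so altering any past record breaks the chain. The record fields (`model_version`, `inputs_digest`, `outcome`) are hypothetical.

```python
import copy
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def _digest(record, prev_hash):
    # Canonical JSON (sorted keys) so the same record always hashes the same way.
    payload = json.dumps({"record": record, "prev_hash": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()

class DecisionLog:
    """Append-only, hash-chained record of automated decisions (illustrative)."""

    def __init__(self):
        self.entries = []

    def append(self, record):
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        stored = copy.deepcopy(record)  # later changes by the caller do not leak in
        self.entries.append({"record": stored, "prev_hash": prev_hash,
                             "hash": _digest(stored, prev_hash)})

    def verify(self):
        """Recompute the chain; return False if any entry was altered."""
        prev_hash = GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash:
                return False
            if entry["hash"] != _digest(entry["record"], prev_hash):
                return False
            prev_hash = entry["hash"]
        return True

log = DecisionLog()
log.append({"model_version": "v1.2", "inputs_digest": "ab12", "outcome": "approved"})
log.append({"model_version": "v1.2", "inputs_digest": "cd34", "outcome": "declined"})
print(log.verify())                               # True
log.entries[0]["record"]["outcome"] = "declined"  # simulate tampering
print(log.verify())                               # False
```

A hash chain only detects tampering if the most recent hash is also kept somewhere the editor cannot change; it complements, rather than replaces, access controls.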

By addressing biases, ensuring fairness, promoting transparency, and adopting ethical solutions, organizations can navigate the ethical considerations in AI development and deployment. These actions build trust and create a foundation for responsible and ethical AI compliance.

Navigating the Legal Frameworks for AI Compliance

Ensuring compliance with relevant laws and regulations is a critical aspect of AI implementation. Navigating the complex legal landscape of AI compliance requires organizations to have a deep understanding of the regulatory frameworks that govern AI use.

Relevant Laws and Regulations

AI technologies operate within a web of legal requirements designed to protect individuals, safeguard privacy, and ensure fair and ethical use of AI systems. Understanding these laws and regulations is crucial for organizations to develop AI solutions that comply with legal standards.

“The effective implementation of AI systems requires a comprehensive understanding of the legal frameworks governing AI use and a commitment to ethical practices.” – John Simmons, AI Compliance Expert

Some of the key laws and regulations that organizations need to consider when integrating AI include:

- The EU General Data Protection Regulation (GDPR): Protects personal data and sets requirements for transparency, lawful bases for processing such as consent, and data subject rights.

- The US Health Insurance Portability and Accountability Act (HIPAA): Safeguards the privacy and security of health information held by healthcare providers, health plans, and their business associates.

- The US Fair Credit Reporting Act (FCRA): Regulates consumer reporting agencies and the users of consumer reports, and sets standards for the use of consumer information, including credit scores.

Challenges in Interpreting Regulations

Interpreting regulations for AI applications presents unique challenges. The rapid advancement of AI technologies often outpaces regulatory frameworks, leaving organizations grappling with the question of how established laws and regulations apply to emerging AI use cases.

The lack of specific guidelines for AI compliance and the evolving nature of AI make it challenging for organizations to ensure they are interpreting and implementing regulations correctly. The absence of standardized definitions and clear boundaries poses difficulties in determining legal compliance requirements.

Regulatory Trends

The field of AI regulation is continually evolving as governments and regulatory bodies adapt to emerging technologies. Keeping up with the latest trends and changes in the regulatory landscape is crucial for organizations to ensure ongoing compliance.

Some notable regulatory trends include:

- Increased focus on algorithmic transparency and explainability to address issues of bias and discrimination.

- Efforts to establish ethical guidelines and principles for AI development and deployment.

- The development of industry-specific regulations to address the unique challenges and risks associated with AI applications in sectors such as healthcare, finance, and transportation.

Legal Compliance Challenges and Strategies

Organizations face various challenges in achieving legal compliance in AI implementation. Some of these challenges include:

- The complexity and dynamism of the legal landscape, requiring continuous monitoring and adaptation.

- The need to strike a balance between compliance requirements and ethical considerations.

- The difficulty in applying existing regulations, originally designed for non-AI systems, to AI applications.

To overcome these challenges, organizations should implement the following strategies:

- Engage legal and compliance experts who specialize in AI to ensure an accurate interpretation of regulations.

- Stay informed about current and upcoming regulatory changes through industry associations, forums, and government publications.

- Establish internal compliance processes that include ongoing risk assessments and compliance training for employees.

By proactively addressing legal compliance challenges and staying updated on regulatory trends, organizations can navigate the legal frameworks for AI compliance more effectively.

Case Study: Legal Compliance Challenges in AI Healthcare Applications

In the healthcare industry, AI technologies offer innovative solutions for diagnosis, treatment planning, and patient care. However, ensuring legal compliance in AI healthcare applications presents unique challenges.

Case Study: AI-Assisted Diagnoses

| Challenges | Strategies |
|---|---|
| The interpretation of medical regulations for AI algorithms. | Engage legal counsel and healthcare experts to validate the compliance of AI algorithms with medical regulations. |
| The management of patient data privacy and security. | Implement robust data protection measures, such as encryption, anonymization, and access controls. |
| The ethical considerations of relying on AI recommendations for critical medical decisions. | Develop transparency mechanisms to provide clinicians and patients with insight into the decision-making process of AI algorithms. |
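The table lists anonymization among the data protection measures. A related technique that is simple to illustrate is pseudonymization: the sketch below drops direct identifiers and replaces the patient ID with a keyed hash (HMAC-SHA256 from Python's standard library), so records can still be linked for analysis without exposing the raw identifier. Note that this is pseudonymization, not anonymization: whoever holds the key can re-link records, and under the GDPR pseudonymized data generally remains personal data. The field names and the in-code key are placeholders.

```python
import hashlib
import hmac

# Placeholder key for illustration only; load it from a secrets manager in practice.
SECRET_KEY = b"replace-with-a-securely-stored-key"

# Hypothetical set of direct identifiers; decide this per dataset.
DIRECT_IDENTIFIERS = {"name", "patient_id", "address"}

def pseudonymize(identifier: str) -> str:
    """Map an identifier to a stable keyed hash (HMAC-SHA256, hex-encoded)."""
    return hmac.new(SECRET_KEY, identifier.encode("utf-8"), hashlib.sha256).hexdigest()

def prepare_for_training(patient: dict) -> dict:
    """Drop direct identifiers and keep a pseudonymous linkage key."""
    cleaned = {k: v for k, v in patient.items() if k not in DIRECT_IDENTIFIERS}
    cleaned["subject_key"] = pseudonymize(patient["patient_id"])
    return cleaned

record = {"patient_id": "MRN-001", "name": "Test Patient", "address": "1 Main St",
          "age": 54, "diagnosis_code": "E11"}
print(prepare_for_training(record))
```

Using a keyed hash rather than a plain hash matters here: identifiers such as record numbers are often guessable, and an unkeyed hash could be reversed by hashing every candidate value.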

Adopting a proactive approach to legal compliance challenges ensures that AI healthcare applications meet regulatory requirements while delivering improved patient care.

Implementing Compliance Strategies in AI

Building a culture of compliance is paramount for organizations looking to harness the advantages of AI while mitigating potential risks. This section covers four strategies: building a culture of compliance through leadership and clear accountability, establishing robust data governance, using technology for compliance monitoring, and collaborating with regulators and industry peers.

Building a Culture of Compliance

Achieving compliance in AI begins with cultivating a culture that values ethical practices and regulatory adherence. Organizations should prioritize building a culture of compliance by instilling a strong commitment to ethical conduct and ensuring that all employees understand the importance of compliance in every aspect of AI development and deployment.

Leadership plays a crucial role in setting the tone for compliance. It is essential for leaders to lead by example and champion ethical decision-making at all levels of the organization. By demonstrating a commitment to compliance, leaders inspire employees to incorporate ethical considerations into their AI initiatives.

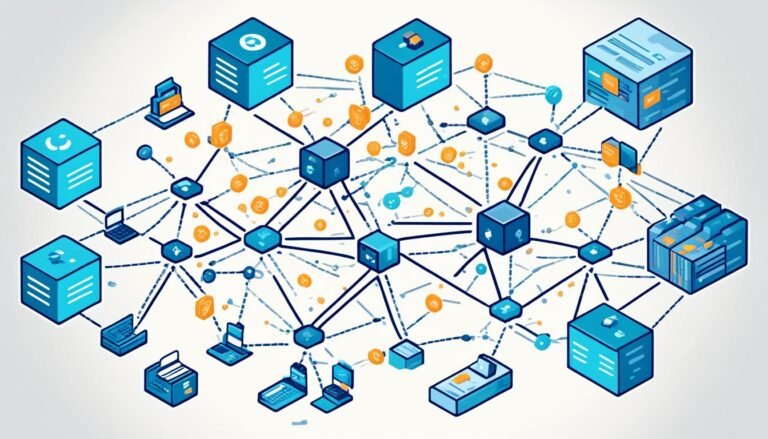

Robust Data Governance Frameworks

Robust data governance frameworks are fundamental to achieving compliance in AI. These frameworks establish guidelines and procedures for handling data, ensuring its accuracy, integrity, and accessibility. With such frameworks in place, organizations can manage data privacy, security, quality, and regulatory compliance within their AI systems.

Technology for Compliance Monitoring

Technology plays a crucial role in enabling compliance monitoring in AI systems. With the rapid advancement of AI technologies, organizations can leverage automated compliance monitoring and reporting tools to detect and address potential compliance violations. By utilizing AI-powered solutions, organizations can proactively identify risks, monitor compliance in real time, and take appropriate actions to maintain regulatory compliance.

Collaboration with Regulators and Industry Peers

Collaboration with regulators and industry peers is key to staying ahead of evolving compliance requirements in the realm of AI. By engaging in dialogue and actively seeking guidance from regulatory bodies, organizations can gain insights into emerging regulatory trends and requirements. Additionally, collaboration with industry peers fosters the exchange of best practices and lessons learned, enabling organizations to enhance their compliance strategies and ensure ethical AI practices.

Building a culture of compliance, implementing robust data governance frameworks, harnessing technology for compliance monitoring, and collaborating with regulators and industry peers are essential pillars in implementing effective compliance strategies in AI.

By adopting these strategies, organizations can navigate the complex landscape of AI compliance and integrate ethical practices into their AI initiatives. Establishing a strong compliance foundation is vital for building trust, mitigating risks, and demonstrating a commitment to responsible and ethical AI implementation.

Managing Risks in AI Implementation

Implementing AI technology in various industries carries inherent risks that organizations must address. By identifying and assessing these risks, developing effective mitigation strategies, monitoring and adapting to changing landscapes, and conducting ethical risk assessments, organizations can proactively manage and minimize potential pitfalls.

Identifying and Assessing Risks

To manage risks in AI implementation effectively, organizations must first identify and assess the specific risks associated with their AI systems. This involves a comprehensive analysis of potential vulnerabilities, such as data breaches, algorithmic bias, or regulatory non-compliance. By understanding the unique risks present in their AI initiatives, organizations can create targeted risk management plans.

Mitigation Strategies

Once risks are identified, organizations must develop mitigation strategies to minimize their impact. This involves implementing measures to prevent and address potential risks, such as robust data security protocols, regular system audits, and training programs to ensure employees are aware of potential risks and how to mitigate them. By establishing proactive measures, organizations can effectively reduce the likelihood and severity of risk incidents.
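A common way to decide which risks to mitigate first is to score each one by likelihood × severity. The sketch below assumes a hypothetical 1 to 5 scale for each dimension and sorts a small risk register so the highest-scoring risks come first. The risks, their scores, and the threshold of 12 are illustrative, not a standard.

```python
from dataclasses import dataclass

@dataclass
class Risk:
    name: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    severity: int    # assumed scale: 1 (negligible) to 5 (critical)

    def __post_init__(self):
        # Reject scores outside the assumed 1-5 scale.
        for field_name in ("likelihood", "severity"):
            value = getattr(self, field_name)
            if not 1 <= value <= 5:
                raise ValueError(f"{field_name} must be between 1 and 5, got {value}")

    @property
    def score(self) -> int:
        return self.likelihood * self.severity

REVIEW_THRESHOLD = 12  # hypothetical score at or above which mitigation is mandatory

register = [
    Risk("Training data reflects biased historical decisions", likelihood=4, severity=4),
    Risk("Personal data exposed through model outputs", likelihood=2, severity=5),
    Risk("Undetected non-compliance after a regulatory change", likelihood=3, severity=3),
]

for risk in sorted(register, key=lambda r: r.score, reverse=True):
    action = "mitigate now" if risk.score >= REVIEW_THRESHOLD else "monitor"
    print(f"{risk.score:>2}  {action:<12}  {risk.name}")
```

Re-scoring a risk after a control is in place (for example, lowering its likelihood once bias testing is routine) shows whether the mitigation actually moved it below the threshold.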

Monitoring and Adapting

Risks associated with AI implementation are dynamic and constantly evolving. To stay ahead, organizations must continuously monitor the regulatory landscape, industry standards, and emerging risks. By adapting their AI systems and compliance strategies accordingly, organizations can proactively address potential risks and safeguard against compliance violations. Regular assessments and updates are crucial to ensuring ongoing compliance and risk management.

Ethical Risk Assessments

Incorporating ethical risk assessments into AI development and deployment processes is essential to ensure responsible and ethical practices. Organizations must thoroughly evaluate the potential ethical implications of their AI systems, such as biases in algorithms, privacy concerns, or potential societal impacts. By conducting comprehensive ethical risk assessments, organizations can address these concerns and design AI systems that align with societal values and regulatory expectations.

Future Directions of AI Compliance

The future of AI compliance is poised to bring both exciting advancements and uncertainties. As technology continues to evolve at a rapid pace, organizations must stay ahead of the curve to ensure compliance with emerging regulatory developments and to effectively navigate the dynamic landscape of AI governance. In this section, we explore the anticipated advancements in AI and their implications for compliance, as well as strategies for organizations to remain agile in the face of regulatory changes.

Anticipated Advancements in AI

The field of artificial intelligence is constantly evolving, and there are several anticipated advancements on the horizon. From enhanced natural language processing to more sophisticated machine learning algorithms, these advancements have the potential to revolutionize various industries. Anticipated areas of advancement include:

- Increased automation and efficiency

- Enhanced predictive analytics

- Improved image and voice recognition

- Augmented decision-making processes

These advancements will undoubtedly bring new opportunities and challenges for organizations seeking to leverage AI while maintaining compliance with regulatory requirements.

Regulatory Developments

The regulatory landscape surrounding AI is evolving rapidly. Governments and regulatory bodies across the globe are working to establish guidelines, standards, and frameworks to ensure responsible AI development and deployment. It is crucial for organizations to closely monitor regulatory developments and understand how they impact their compliance obligations. Key areas of regulatory focus include:

- Data privacy and protection

- Algorithmic transparency and explainability

- Responsible use of AI in sensitive domains

- Ethical considerations in AI decision-making

Staying up to date with regulatory developments and proactively adapting to compliance requirements will be essential for organizations to navigate the ever-changing regulatory landscape.

Strategies for Staying Agile

As organizations strive to maintain compliance in an evolving AI landscape, it is essential to adopt strategies that promote agility and adaptability. Here are some key strategies to consider:

- Stay informed: Regularly monitor advancements in AI technologies and regulatory developments to anticipate potential compliance requirements.

- Invest in expertise: Build a team of professionals with expertise in both AI and compliance to navigate the complexities of AI governance.

- Implement robust monitoring systems: Utilize technology-driven solutions to monitor AI systems and proactively identify potential compliance risks.

- Cultivate partnerships: Collaborate with regulators, industry peers, and experts to stay informed and influence the development of responsible AI governance frameworks.

- Educate employees: Train employees on ethical AI practices and compliance requirements to foster a culture of responsible AI adoption throughout the organization.

By implementing these strategies, organizations can position themselves to adapt to regulatory changes, leverage anticipated advancements in AI, and maintain ethical and compliant AI practices.

Recap of Key Takeaways

As we wrap up our exploration of AI compliance and its ethical implications, it is crucial to recap the key takeaways from this article. Throughout this discussion, we have emphasized the significance of prioritizing ethical and legal compliance in AI initiatives. By adhering to robust regulatory standards and ethical guidelines, organizations can ensure the responsible and accountable use of AI technologies.

Key Takeaway 1: Compliance in Artificial Intelligence Use: AI compliance involves meeting the legal requirements and ethical standards governing AI development and deployment. Organizations must navigate the complex landscape of regulations and frameworks to ensure the responsible use of AI technologies.

Key Takeaway 2: Ethical AI Practices: Integrating ethics into AI involves addressing biases, ensuring fairness, promoting transparency, and prioritizing privacy. By addressing these ethical considerations, organizations can build trust and ensure the responsible implementation of AI systems.

Key Takeaway 3: Challenges and Opportunities: AI compliance presents challenges such as interpreting regulations and balancing compliance requirements with ethical considerations. However, organizations can leverage compliance as an opportunity to innovate, build robust governance frameworks, and establish a culture of accountability.

Key Takeaway 4: Integration across Industries: AI technologies have revolutionized diverse industries. However, organizations must navigate the regulatory landscape specific to their sector to meet compliance requirements. By integrating AI responsibly, organizations can unlock the full potential of these technologies while ensuring ethical and legal compliance.

Key Takeaway 5: Strategies for Compliance: Building a culture of compliance, establishing robust data governance frameworks, leveraging technology for monitoring, and collaborating with regulators and industry peers are crucial strategies for ensuring AI compliance.

“Ensuring ethical AI practices involves addressing biases, ensuring fairness in AI algorithms, and promoting transparency and explainability.”

As organizations delve into the realm of AI, they must go beyond technical aspects and consider the ethical implications of their actions. By consistently prioritizing ethical and legal compliance, organizations can harness the power of AI while maintaining trust, fairness, and accountability.

Now that we have explored the importance of AI compliance and its ethical considerations, let’s take a closer look at the call to action for responsible AI adoption in the next section.

Call to Action for Ethical AI Compliance

In today’s rapidly evolving technological landscape, it is crucial for organizations to prioritize ethical and legal compliance in their AI initiatives. Responsible AI adoption is not only about leveraging the potential of AI-driven innovations, but also about ensuring that these innovations are aligned with regulatory expectations and societal values. To achieve this, proactive risk management and collaboration are essential.

Organizations must take a proactive approach to identify and mitigate risks associated with AI implementation. This involves conducting thorough risk assessments, both from a technical and ethical standpoint, to identify potential biases, fairness issues, and other ethical considerations. By prioritizing risk management, organizations can ensure that their AI systems operate ethically and responsibly.

Furthermore, collaboration with regulators and industry peers is crucial in staying ahead of evolving compliance requirements. Engaging in open discussions, sharing best practices, and collaborating on ethical guidelines and governance frameworks can foster a culture of responsible AI adoption. By working together, organizations can actively shape the ethical and legal landscape of AI.

Key Actions for Ethical AI Compliance:

- Prioritize ethical and legal compliance: Integrate compliance considerations into the AI development lifecycle from the start. Make ethical and legal compliance a top priority in decision-making processes.

- Establish a cross-functional ethics committee: Create a dedicated team with members from various disciplines to oversee and guide ethical decision-making in AI implementation. This committee should consider diverse perspectives and ensure that ethical considerations are integrated into AI systems.

- Conduct ethical impact assessments: Regularly assess the impact of AI systems on different stakeholders, including individuals, communities, and society as a whole. Identify and address potential biases, fairness concerns, and other ethical risks.

- Implement continuous monitoring and auditing: Regularly monitor and audit AI systems to identify any compliance violations or ethical issues. Proactively address any identified issues and adapt the systems to changing regulatory and ethical requirements.

- Provide employee training on ethical AI practices: Educate employees on the ethical implications of AI and the importance of responsible AI adoption. Foster a culture of ethical awareness and accountability throughout the organization.

By implementing these key actions, organizations can demonstrate their commitment to ethical AI compliance and contribute to a responsible AI ecosystem. It is our collective responsibility to ensure that AI technologies are developed and deployed in a manner that respects ethical principles, safeguards individual rights, and promotes societal well-being.

Unlocking the Potential of AI Responsibly

The potential of AI is immense, but it must be harnessed responsibly. Prioritizing ethical and legal compliance, adopting responsible AI practices, and embracing risk management and collaboration are pivotal in realizing the benefits of AI while minimizing potential risks. By taking action today, organizations can pave the way for a future where AI-driven innovations are trusted, transparent, and accountable.

Challenges in AI Compliance

Implementing ethical AI in compliance management presents various challenges that organizations must overcome to ensure responsible and sustainable AI practices. These challenges encompass technical aspects, organizational resistance, and the delicate balance between compliance requirements and ethical considerations.

Technical Challenges in Developing Ethical Algorithms

Developing AI algorithms that align with ethical standards is a complex task that entails several technical challenges. One such challenge involves addressing biases that may exist in the data used to train AI models. It is crucial to mitigate biases and ensure fairness in algorithmic decision-making processes. Additionally, the explainability and transparency of AI algorithms pose challenges, as ethical considerations require a clear understanding of how decisions are made within AI systems.

Organizational Resistance to Change

Integrating ethical AI practices often encounters resistance within organizations. This resistance may stem from various factors, such as a lack of awareness about the ethical implications of AI, concerns about the potential disruption caused by compliance requirements, or resistance to altering established workflows and processes. Overcoming organizational resistance requires effective change management strategies, clear communication, and fostering a culture that values ethical considerations in AI implementation.

Striking a Balance between Compliance Requirements and Ethical Considerations

Compliance requirements and ethical considerations can sometimes appear to be in conflict, posing a challenge for organizations seeking to navigate the complex landscape of AI compliance. While compliance requirements aim to ensure adherence to legal and regulatory frameworks, ethical considerations encompass broader societal and ethical implications. Striking the right balance requires a thorough understanding of both the compliance requirements and the ethical implications, as well as a comprehensive framework for ethical decision-making and risk assessment.

To illustrate the challenges faced in AI compliance, the following table outlines some common technical challenges, organizational resistance factors, and the delicate balance between compliance requirements and ethical considerations:

| Technical Challenges | Organizational Resistance | Compliance Requirements vs. Ethical Considerations |
|---|---|---|
| Addressing biases in AI algorithms | Resistance to change and disruption | Striking a balance between legal compliance and societal impact |
| Ensuring fairness in algorithmic decision-making | Lack of awareness about AI ethics | Clear communication and understanding of ethical implications |
| Explainability and transparency of AI algorithms | Resistance to altering established workflows | Evaluating the broader ethical implications of compliance requirements |

Despite these challenges, organizations must continue to prioritize ethical AI practices and navigate the complexities of compliance management to harness the benefits of AI responsibly.

Roadmap for Ethical AI in Compliance Management

Creating a roadmap for ethical AI in compliance management requires a strategic approach. Organizations must establish a cross-functional ethics committee to guide and oversee the integration of AI systems responsibly. This committee should include representatives from legal, compliance, technology, and ethics to ensure a holistic perspective on ethical considerations.

Importance of Ethical Impact Assessments

Ethical impact assessments play a crucial role in identifying and addressing potential ethical risks associated with AI systems. These assessments involve evaluating the potential impact of AI algorithms on various stakeholders, including employees, customers, and society as a whole. Conducting ethical impact assessments allows organizations to anticipate and mitigate potential negative consequences, ensuring compliance with ethical guidelines and regulatory requirements.

Continuous Monitoring and Auditing

Continuous monitoring and auditing of AI systems are essential to ensure ongoing compliance and ethical behavior. It involves implementing robust monitoring mechanisms to detect and address any biases, discriminatory patterns, or other ethical concerns that may arise during the operation of AI systems. Regular audits provide organizations with insights into the performance and ethical implications of AI algorithms, enabling proactive improvements and timely interventions to address compliance issues.
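In its simplest form, continuous monitoring means re-running a fairness check on each new batch of production decisions and raising an alert when a metric crosses a threshold. The sketch below assumes decisions arrive in batches of (group, approved) pairs and uses the ratio of the lowest to the highest group approval rate as its metric. The batch format, the 0.8 threshold, and the minimum group size are all assumptions; a production system would route alerts to an accountable owner rather than print them.

```python
from collections import Counter

MIN_PER_GROUP = 30  # hypothetical: skip groups too small to judge reliably
THRESHOLD = 0.8     # hypothetical alert level for the approval-rate ratio

def batch_ratio(batch):
    """Lowest/highest approval rate across sufficiently large groups, or None."""
    totals, approvals = Counter(), Counter()
    for group, approved in batch:
        totals[group] += 1
        approvals[group] += int(approved)
    rates = [approvals[g] / totals[g] for g in totals if totals[g] >= MIN_PER_GROUP]
    if len(rates) < 2 or max(rates) == 0:
        return None  # not enough evidence to compare groups
    return min(rates) / max(rates)

def monitor(batches):
    """Yield (batch_index, ratio) for every batch that breaches the threshold."""
    for index, batch in enumerate(batches):
        ratio = batch_ratio(batch)
        if ratio is not None and ratio < THRESHOLD:
            yield index, ratio

# Synthetic batches: balanced in batch 0, skewed against group B in batch 1.
batch0 = [("A", n % 2 == 0) for n in range(40)] + [("B", n % 2 == 0) for n in range(40)]
batch1 = [("A", n % 4 != 0) for n in range(40)] + [("B", n % 4 == 0) for n in range(40)]

for index, ratio in monitor([batch0, batch1]):
    print(f"ALERT: batch {index} approval-rate ratio {ratio:.2f} is below {THRESHOLD}")
```

The minimum group size guards against false alarms from small samples; the right value depends on traffic volume and how quickly issues must be caught.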

Employee Training on Ethical AI Practices

Empowering employees with the necessary knowledge and skills in ethical AI practices is crucial for promoting ethical compliance. Providing comprehensive training programs on the ethical implications of AI, biases, fairness, and transparency fosters a culture of ethical awareness and responsible AI use within the organization. By equipping employees with the understanding and tools to navigate ethical challenges, organizations can ensure ethical compliance throughout the AI lifecycle.

| Key Steps in the Roadmap for Ethical AI in Compliance Management | Benefits |
|---|---|
| Establish a cross-functional ethics committee | Ensures a comprehensive perspective on ethical considerations |
| Conduct ethical impact assessments | Identifies and mitigates potential ethical risks |
| Implement continuous monitoring and auditing | Detects and addresses ethical concerns in real-time |
| Provide employee training on ethical AI practices | Promotes ethical awareness and responsible AI use |

By following this roadmap, organizations can embed ethical considerations into their AI compliance management strategies, ensuring that AI systems align with regulatory requirements and ethical guidelines. This not only supports legal compliance but also helps build trust among stakeholders and promotes responsible and ethical AI adoption.

Conclusion

In conclusion, this article emphasizes the critical role of ethical AI practices in compliance management. As organizations continue to integrate AI into their operations, it becomes imperative to navigate the ethical considerations that arise. Transparency, accountability, fairness, and privacy must be core principles guiding the responsible use of AI.

Adhering to responsible AI practices not only ensures compliance with regulatory standards but also promotes public trust and mitigates risks associated with biased or discriminatory outcomes. By prioritizing ethical considerations in AI integration, organizations can harness the full potential of AI while upholding the values that underpin a just and inclusive society.

We call for a sustainable approach to the integration of AI in compliance management. It requires the collaboration of policymakers, industry leaders, and ethical thinkers to shape the regulatory frameworks and guidelines that govern AI. Additionally, organizations should invest in robust governance frameworks, interdisciplinary ethics committees, and continuous monitoring to ensure ongoing compliance and accountability.

In the journey towards ethical AI integration, responsible decision-making, ongoing education and training, and proactive risk management are fundamental. By adopting a holistic approach that combines legal compliance with ethical considerations, organizations can build an AI ecosystem that is both innovative and socially responsible.